Foreign-Literature Translation Material: A Deep-Learning-Based Method for Homography Matrix Estimation

2021-12-18 23:06:44

The English original is 14 pages long.

February 26, 2019

Deep Image Homography Estimation

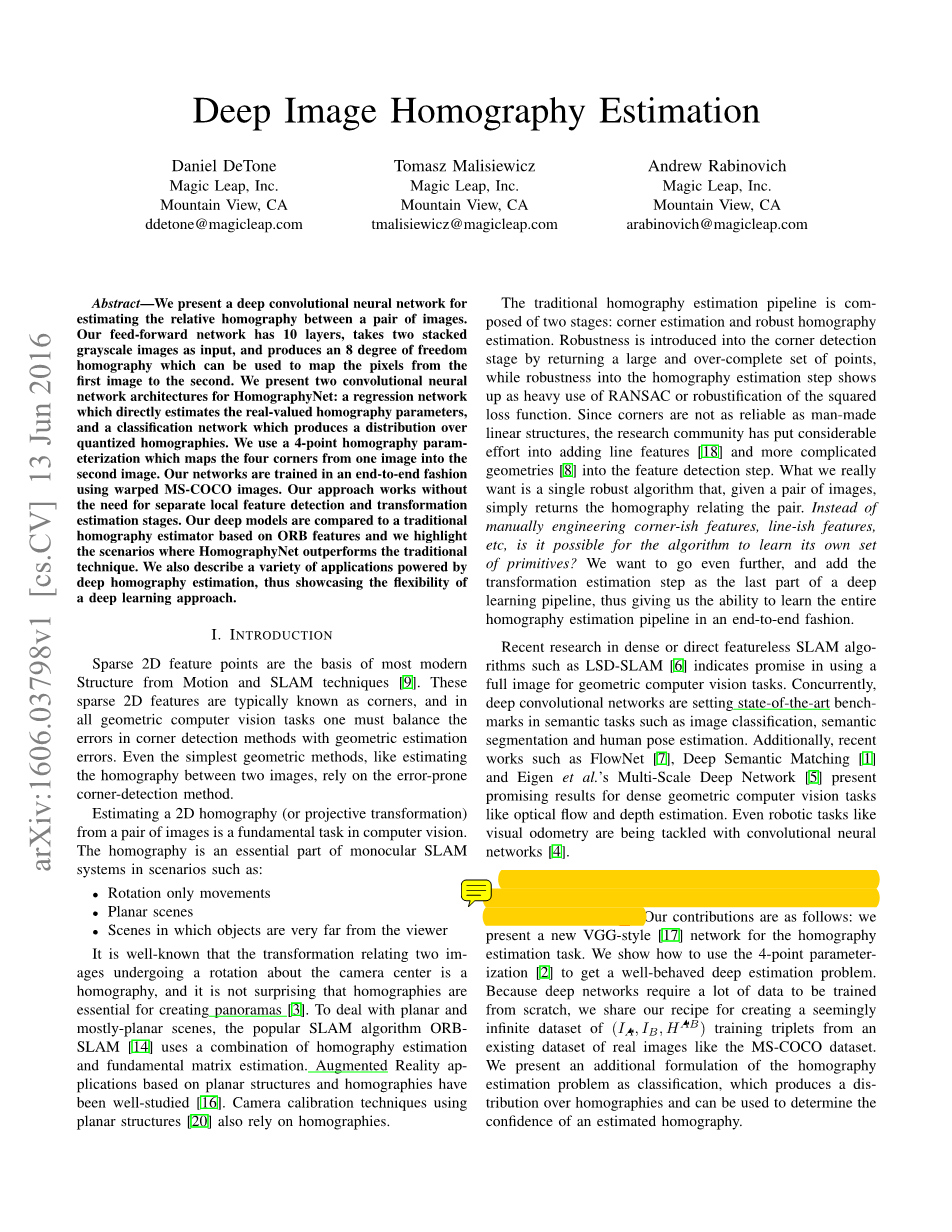

Abstract: We present a deep convolutional neural network for estimating the relative homography between a pair of images. Our feed-forward network has 10 layers, takes two stacked grayscale images as input, and produces an 8-degree-of-freedom homography which can be used to map the pixels from the first image to the second. We present two convolutional neural network architectures for HomographyNet: a regression network which directly estimates the real-valued homography parameters, and a classification network which produces a distribution over quantized homographies. We use a 4-point homography parameterization which maps the four corners from one image into the second image. Our networks are trained in an end-to-end fashion using warped MS-COCO images. Our approach works without the need for separate local feature detection and transformation estimation stages. Our deep models are compared to a traditional homography estimator based on ORB features, and we highlight the scenarios where HomographyNet outperforms the traditional technique. We also describe a variety of applications powered by deep homography estimation, thus showcasing the flexibility of a deep learning approach.


I. INTRODUCTION

Sparse 2D feature points are the basis of most modern Structure from Motion and SLAM techniques [9]. These sparse 2D features are typically known as corners, and in all geometric computer vision tasks one must balance the errors in corner detection methods with geometric estimation errors. Even the simplest geometric methods, like estimating the homography between two images, rely on the error-prone corner-detection method.

Estimating a 2D homography (or projective transformation) from a pair of images is a fundamental task in computer vision. The homography is an essential part of monocular SLAM systems in scenarios such as:

- Rotation-only movements

- Planar scenes

- Scenes in which objects are very far from the viewer

It is well-known that the transformation relating two images undergoing a rotation about the camera center is a homography, and it is not surprising that homographies are essential for creating panoramas [3]. To deal with planar and mostly-planar scenes, the popular SLAM algorithm ORB-SLAM [14] uses a combination of homography estimation and fundamental matrix estimation. Augmented Reality applications based on planar structures and homographies have been well-studied [16]. Camera calibration techniques using planar structures [20] also rely on homographies.
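A short derivation may make the rotation-only case concrete. It is a sketch under the assumption of a pinhole camera whose intrinsic matrix $K$ is the same in both views; the symbols $K$, $R$, $\mathbf{X}$ and $\lambda$ are introduced here for illustration and do not appear in the original text. Let $\mathbf{X}$ be a 3D point in the first camera's frame, $\mathbf{x}$ and $\mathbf{x}'$ its homogeneous pixel coordinates in the two images, and $R$ the rotation about the camera center:

$$
K\mathbf{X} = \lambda\,\mathbf{x}
\quad\Longrightarrow\quad
\mathbf{x}' \sim K R\,\mathbf{X} = \lambda\, K R K^{-1}\mathbf{x} \sim K R K^{-1}\mathbf{x}
$$

Under these assumptions the two images are related by $H = K R K^{-1}$. The depth-dependent factor $\lambda$ disappears into the projective scale, which is why the relation holds for every scene point regardless of its distance.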

The traditional homography estimation pipeline is composed of two stages: corner estimation and robust homography estimation. Robustness is introduced into the corner detection stage by returning a large and over-complete set of points, while robustness in the homography estimation step shows up as heavy use of RANSAC or robustification of the squared loss function. Since corners are not as reliable as man-made linear structures, the research community has put considerable effort into adding line features [18] and more complicated geometries [8] into the feature detection step. What we really want is a single robust algorithm that, given a pair of images, simply returns the homography relating the pair. Instead of manually engineering corner-ish features, line-ish features, etc., is it possible for the algorithm to learn its own set of primitives? We want to go even further, and add the transformation estimation step as the last part of a deep learning pipeline, thus giving us the ability to learn the entire homography estimation pipeline in an end-to-end fashion.

Recent research in dense or direct featureless SLAM algorithms such as LSD-SLAM [6] indicates promise in using a full image for geometric computer vision tasks. Concurrently, deep convolutional networks are setting state-of-the-art benchmarks in semantic tasks such as image classification, semantic segmentation and human pose estimation. Additionally, recent works such as FlowNet [7], Deep Semantic Matching [1] and Eigen et al.'s Multi-Scale Deep Network [5] present promising results for dense geometric computer vision tasks like optical flow and depth estimation. Even robotic tasks like visual odometry are being tackled with convolutional neural networks [4].

In this paper, we show that the entire homography estimation problem can be solved by a deep convolutional neural network (see Figure 1). Our contributions are as follows: we present a new VGG-style [17] network for the homography estimation task. We show how to use the 4-point parameterization [2] to get a well-behaved deep estimation problem. Because deep networks require a lot of data to be trained from scratch, we share our recipe for creating a seemingly infinite dataset of $(I_A, I_B, H_{AB})$ training triplets from an existing dataset of real images like the MS-COCO dataset. We present an additional formulation of the homography estimation problem as classification, which produces a distribution over homographies and can be used to determine the confidence of an estimated homography.

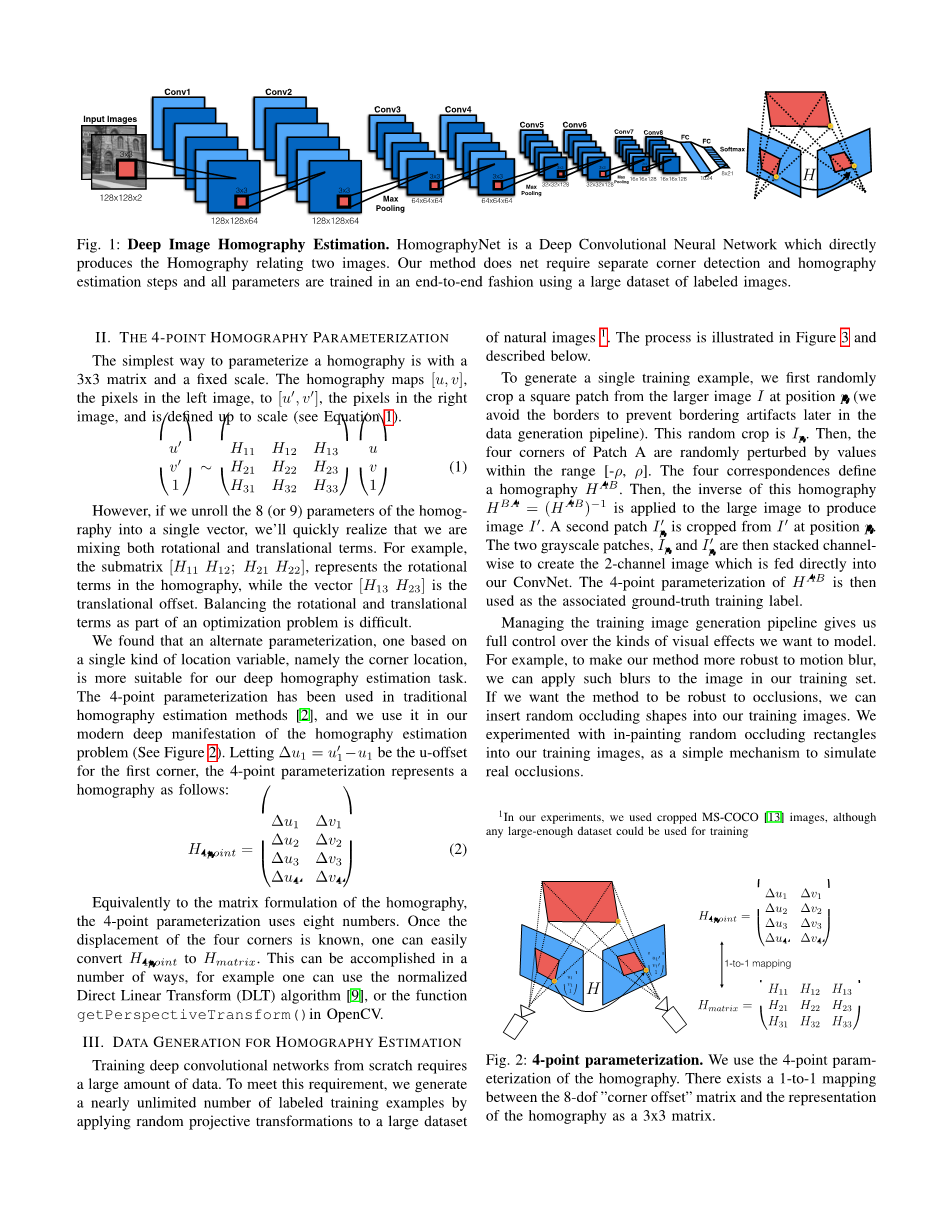

II. THE 4-POINT HOMOGRAPHY PARAMETERIZATION

The simplest way to parameterize a homography is with a 3x3 matrix
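To relate the 3x3 matrix form to the four-corner form named in this section's heading, the following is a minimal sketch in pure Python, not the authors' implementation. It assumes the matrix can be normalized so that its bottom-right entry is 1, and it uses a 128x128 patch with corner offsets drawn from [-32, 32] purely as illustrative values. The function names `solve_linear`, `four_point_to_matrix` and `apply_homography` are hypothetical helpers introduced here.

```python
import random


def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def four_point_to_matrix(corners, offsets):
    """Turn four corners and their (dx, dy) offsets into a 3x3 homography.

    Assumption: the matrix is scaled so that H[2][2] == 1, leaving 8 unknowns,
    which the 4 correspondences (2 equations each) determine exactly.
    """
    a, b = [], []
    for (x, y), (dx, dy) in zip(corners, offsets):
        u, v = x + dx, y + dy  # where the corner lands in the second image
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(H, point):
    """Map a pixel (x, y) through H using homogeneous coordinates."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v


# Illustrative values only: a 128x128 patch and offsets in [-32, 32].
corners = [(0.0, 0.0), (127.0, 0.0), (127.0, 127.0), (0.0, 127.0)]
rng = random.Random(0)
offsets = [(rng.uniform(-32, 32), rng.uniform(-32, 32)) for _ in corners]
H = four_point_to_matrix(corners, offsets)
```

Calling `apply_homography(H, corners[i])` should return the i-th corner shifted by `offsets[i]`, up to floating-point error. Multiplying every entry of `H` by a nonzero constant leaves the mapping unchanged, which is why a 3x3 matrix with 9 entries carries only the 8 degrees of freedom mentioned in the abstract.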
